Fortunately Microsoft has included SqlBulkCopy in the SqlClient for ADO.NET 2.0

In my testing. I loaded Australia's PostCode list from csv file, consisting of 16,789 rows.

I compared SqlAdapter.Fill(), SqlAdapterFill() + Setting the UpdateBatchSize property, and finally I used SqlBulkCopy.

First I read the csv file into in memory DataTable. For this purpose I read all rows in one go. In real live application, we would need to page the operation.

Then I push the DataTable into SqlServer 2005 table using 3 different methods.

- SqlAdapter.Fill

- SqlAdapter.Fill + Setting the UpdateBatchSize property = 500

- SqlBulkCopy

Option 2 took 9,636 ms

and .....

Option 3 took 568 ms

Wow,.....

I suspect that option 1 and option 2 difference would be more significant if the SQL Server is located accross network. In this test I use local SQL Server.

To do SqlBulkCopy is surprisingly simple:

You need only to specify the connection string, the datatable and the name of the destination table.

string connectionString = "....";

DataTable csvTable = PopulateTable();

SqlBulkCopy bcp = new SqlBulkCopy(connectionString );

bcp.DestinationTableName = "PostCodes";

bcp.WriteToServer(csvTable);

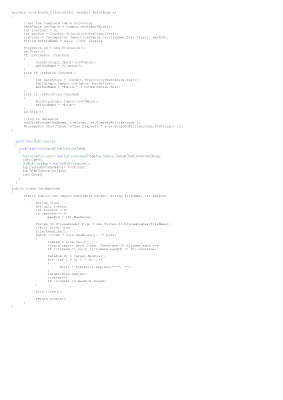

Here are snippet of the code from my Benchmark Harness, which is a winforms application